[Note to reviewers: Feel free to pass this link along to other potential reviewers. However, since this is still a work in progress, please don’t make it public yet. You can reach me at sbura@pobox.com]

Stéphane Bura


Have you ever tried to come up with new game mechanics? Not just a clever variation of some existing game or an original combination of existing mechanics, but something truly innovative.

If you’re anything like me, it’s a vertigo-inducing experience. There are no maps of the game design space to guide you toward virgin territories and no languages to describe what you’re doing. How can this be when we are so good at identifying innovation? We know that there’s something different in Portal, Braid or Echochrome, but we’d probably be at a loss if asked to explain what it is without describing the actual game mechanics.

This article proposes an answer to this question: a model for describing game mechanics by the types of interaction they create between the player and the game. Along the way, I’ll present a short history of game mechanics and game genres, explain how they pertain to this classification, show which parts of the game design space are underexplored, and give a few examples of how to innovate in such places.

With such an ambitious agenda, let’s start with a couple of caveats.

Caveat 1: The Problem with Formal Approaches

“One can't proceed from the informal to the formal by formal means.” – Alan J. Perlis

Is it worth your while to learn a new formalism? Is the expected return on investment high enough to justify the effort? I can’t answer these questions for you. The feedback I received on my previous work on game design theory made it clear that this is a major issue: learning a new formal approach demands a leap of faith. To make these ideas more accessible, I decided this time to adapt existing, recognized models to videogames instead of creating my own framework. However, this approach still requires learning some concepts, even if you’re already familiar with the original work they’re based on. I did my best to keep things clear and simple. You’ll be the judge of whether this model is worth the effort it takes to master it.

Caveat 2: What this Article Is Not About

There is no map of the game design space. This article proposes one.

It’s obviously not all-inclusive – which would be paradoxical when talking about innovation – but I believe it covers a lot of ground, both explored and unexplored. So, I’m not claiming that this article describes all the possible types of innovation. Indeed, innovation is about making new connections and breaking existing patterns, so no framework could encompass it. What I’m saying is that there’s still a lot of ground to explore within the proposed framework.

Since I will ramble for pages and pages before telling you about my theory, I wouldn’t want you to lose interest before reaching the crunchy bits. So here they are:

If you want to jump to the practical parts of the theory, go directly to Mapping the Game Design Space.

If you’re interested in how I got there, read on.

Considering the continued economic success of genre games that don’t offer innovative gameplay (sports, FPS, RPG, RTS, fighter, action adventure), this may be a fair question. The answer truly depends on what kind of design goals you’re pursuing.

If your goal is to explore the possibilities of a well-defined genre, new game mechanics might actually be detrimental to your purpose, a subject I’ll expand on in the part about the culture of games. If, however, your design is about generating meaning through the game playing experience, you might need something that existing mechanics can’t convey. As Soren Johnson says, theme is not meaning. In videogames, meaning is expressed through interactivity. If you don’t have the right systems to express your intended meaning, you must create new ones.

I believe that games can only have a significant cultural impact if players can ascribe meaning to them. In the past, this could be achieved through story or setting alone, but this no longer seems to be the case, or at least it has become much more difficult as audiences grow more sophisticated. Meaning now requires gameplay.

Talented designers can develop games in which tried and true mechanics convey new meanings, like Jason Rohrer’s Gravitation, but how many new meanings do you think a designer can express by having the player jump on stuff or shoot at stuff? Can you make a shooter about justice? An RTS about honor? A fighting game about loss? Maybe, and maybe these are interesting design challenges, but wouldn’t you prefer using gameplays that put these concepts at their core? Wouldn’t they provide a purer, more effective experience? I believe so.

Secondly, new game mechanics are fun to learn and thus are a big draw for players. How cool was it when you mastered portal jumping in Portal? This article’s title is a humble homage to Raph Koster’s A Theory of Fun where he explains how learning new skills is an integral part of the fun generated by videogames. I agree and will talk more about this below.

Roger von Oech exhorts creative people to become aware of the mental locks that impair their abilities. He calls mental locks “attitudes that lock our thinking into the status quo and keeps us thinking more of the same.” If this is the way to solve our innovation problem, we must ask: what kind of gigantic mental locks could affect the whole industry and not be perceived as such?

The answer to this question lies in how new ideas come to be. In Les Idées, French philosopher and sociologist Edgar Morin described the conditions required for new ideas to occur to someone:

There is no doubt that the game design community fulfills the first condition. We’re doing okay on the second one although, as a community, we tend to be very respectful of each other’s ideas, at least in public. There are very few iconoclastic designers, probably because our domain is so new. But, regarding the third condition, we do follow an orthodoxy. There are beliefs that we never question and never consider questioning. It’s not for lack of open-mindedness, but because these beliefs define what a videogame should be. At least for now.

I think that the reason why it is so hard to find truly new game mechanics is that we’ve already found most of the good ones that exist within the cultural framework our industry has adopted. If we want to create new experiences, it’s time to alter this framework. In order to innovate, we need to slay a few sacred cows.

If we were focused on making everything easy to learn, rather than easy to use, we would all be riding tricycles. The bicycle is harder to learn to ride, but much more powerful.

– Doug Engelbart

“Easy to learn, hard to master” has turned into a mantra. What does it even mean?

On the surface, it means that we want our games to be accessible to new players. A laudable goal in itself, which has unfortunately locked us into a fearful design path. We fear that, if players find the learning experience too difficult, they’ll become discouraged and stop playing. So our tutorial levels are laden with hollow challenges and disproportionate attempts at positive reinforcement. And there’s a lot of handholding. However, we’re afraid for a good reason: players do quit when they can’t play our games.

Yet jumping on moving platforms is hard to learn.

3D navigation is hard to learn.

Optimizing an economic engine is hard to learn.

Using portals in Portal is so hard to learn that the whole game is basically a tutorial.

Here’s a secret: Learning is not easy. That’s why it’s called learning. It’s a process, an ongoing activity.

Instead of trying to make games easy to learn, we should make them easy to use. It should be easy for the player to experiment so that he can build a mental model of the game world and discover its rules by himself, as Jean Piaget and Seymour Papert have advocated.

Nintendo has a reputation for making very accessible games, but it’s not because they are easy to learn. Think about Mario: it’s a prime example of “teaching by failure,” something that is now considered taboo among designers. You don’t know what will happen the first time you interact with a new object or a new environment, or how a new power will work, and often you’re punished for it. Like falling off a bike. Where Nintendo outshines its competition is in how it approaches player experimentation and mental model building. Every object in a Mario game has a simple and consistent behavior, so that experiments can be repeated in similar conditions again and again, letting the player choose which experimental factors he wants to modify each time. Furthermore, the world is entirely made out of these objects, which means that there are no additional hidden rules. It’s a closed system and the game rules are fully exposed to the player, all their complexity visible and understandable. Our brains love this. When the rules get more complex, less obvious, we also love inferring them from the results of our actions (as long as we can actually do it). Think about big successes like StarCraft, Civilization or The Sims. Sure, one can play these games without really understanding their underlying rules, but getting meaningful results takes a lot of cognitive work. And that’s perfectly fine.

Where else in the world can we get the same clarity, the same level of understanding? Videogames are awesome teaching tools. As Koster has shown us and as Richard Feynman would put it, they offer us the pleasure of finding things out. Making them easy to learn robs us of this pleasure.

Of course, I’m not advocating for making games harder to learn. The aforementioned mantra exists because videogames are hard to learn and we’ve identified this problem.

Why are videogames hard to learn? There are two main reasons. The first one is not of a cognitive nature but pertains to sociology.

Videogames are a unique medium because they put interaction at the heart of the experience they offer, and at the heart of interaction is communication. Our formal understanding of communication has greatly progressed since the late forties, when Claude Shannon first formulated his theory of information. As Yves Winkin puts it, we’ve moved from a telegraphic vision of communication to an orchestral one. This latter vision is best embodied in the work of sociologist Erving Goffman, who said: “Communication is the performance of culture.”

What Goffman meant is that communication has a purpose beyond its performative aspect, that is, beyond transferring information to someone else in order to change his state to a desired one (the Shannonian view). The greater purpose of communication is to reassure all its participants that they belong to the same culture. This is done by practicing the rituals and following the rules that define this culture.

Goffmanian / orchestral communication is mostly about context and metacommunication – the part of the communication that is not about the content, but about the relation between the actors, as defined by Gregory Bateson et al. The metacommunicative part of communication conveys information about the nature of the relationship between the actors and indicates how the content part should be interpreted: is the speaker uncertain? afraid? in a dominant position? is the content an order, a joke, an insult? One might think that, when communicating, you could go straight to the point and stick to the content. But Ray Birdwhistell has shown that two actors in close proximity cannot avoid metacommunicating – he calls this property involvement – even if it’s through non-verbal means. So, learning to understand, recognize and express the contexts of communication is vital to the members of a culture. Birdwhistell explains that this learning requires that you consider these contexts apart from the communication’s content. He likens it to understanding basketball: you can truly learn how the system works only when you can watch plays without watching the ball.

This knowledge of contexts is how Ward Goodenough defines culture: all you need to know in order to belong. Once you have acquired the shared knowledge of a given culture, you have to apply it so that other members can verify that you belong to their culture. As Birdwhistell explains: belonging is being predictable.

Anyone who has ever worked on perfecting controls or a user interface is familiar with this concept. We pursue the Grail of “intuitive” UI, an interface that is so well designed that it’s fully predictable. Chris Crawford has shown in The Art of Interactive Design how pointless and meaningless this pursuit is. Sure, we can make better UIs, but we can’t expect them to be intuitive. The only kind of interaction you can practice is the one you’ve learned. And to be able to communicate with a computer, you have to learn a lot of arbitrary rules. You may not realize it because you’re so used to it, but interacting with a computer is weird – at least compared to interacting with a human. Nothing in our lives prepares us for that. On the contrary, by interacting with humans, we build all sorts of false expectations about using a computer. Computers display such a high degree of autonomy that it takes us a while to understand that they suck at metacommunicating.

Computers lack the analog signals for showing us how they interpret our commands. A user gets no clues as to whether he’s doing the right thing to reach his goal, let alone whether he’s doing it the right way. It’s no wonder then that obtaining the desired outcome can seem like the result of an arcane ritual, reproduced without being understood, as if computers were our own Skinner boxes. But computers lack this capacity for metacommunication by design. We want machines that obey our orders or, at best, anticipate our desires. We liberally use the term interaction when talking about what occurs between users and computers, yet we keep thinking about computers with a Shannonian mindset because computers are built that way. They’ve been designed from the ground up to answer performative commands from their users.

So, if we can’t talk about context with our computers, how can we become predictable to one another? As Goffman explains, when somebody is unpredictable, people try to impose their own rules upon him in order to make him predictable. Since computers don’t understand users enough to predict their behaviors, that’s exactly what they do. By chiding and punishing us for each of our mistakes, by limiting the possible commands we can give them and by controlling our access to the various contexts of communication (or modes in computer parlance), they make sure that we are the ones who become predictable. Since we can’t negotiate with our computers the components of the culture in which communication occurs, we have to adopt their rules if we want to use them. These unbreakable rules become “all we need to know in order to belong” to the culture of the computer. But learning arbitrary rules – rules that make little sense outside of the interaction itself – is hard, even if interface designers do their best to make learning and following them as painless as possible. That’s why Apple and console manufacturers are so strict about their UI technical requirements (e.g. how menus must work everywhere): they want to keep the number of rules users must learn and internalize as small as possible. But the truth is, learning to communicate with a given computer system takes a long time and it’s hard. Most people don’t want to do it again and again. So, in order to join the culture that has been de facto created out of habit, most computer systems use the same concepts and metaphors for manipulating information (files and directories, modal OK/Cancel dialogs, scrolling, etc.), only tweaking their particular look and feel. But even these small differences can be enough to cause unease in a user. Goffman calls unease the feeling you get when you perceive that cultural rules are broken by one of the participants, as when a button in an unfamiliar OS doesn’t do what you think it should. And you thought calling “Mac vs. PC” a culture war was an exaggeration.

The reason we put up with this imbalance in the relationship between user and computer is at the heart of the Shannonian approach. According to Shannon, good communication reduces uncertainty and produces expected results in the receiver. We accept the imbalance because communication errors caused by uncertainty lead to costly mistakes. We want our commands to be unambiguous. We want our machines to do what we tell them to do without failing.

Since videogames run on computers, we’ve applied these design principles to our games. In order to play, players have to learn a lot of arbitrary rules that, more often than not, have little to no relation to any other computer-related rules they already know [I’ll explore this learning process later on]. That’s one of the reasons why genres are so preeminent in our industry. It takes so much effort to learn the rule set associated with a given genre that players feel it should be worth it (1). Once they have learned “all they need to know,” players want to belong. They derive pleasure from becoming experts and exploring clever variations, and genres offer them the guarantee that they’ll be able to do that with minimum effort. It’s comparable to readers who exclusively read crime or science fiction because they don’t want to learn the reading protocols of other genres (even if they’re not conscious of it).

Becoming skilled at a new genre is relatively easy compared to learning a new culture, because no genre challenges the underlying rules of computers. Genres operate at another level.

But games are different. Games can move away from Shannon because games are not about reducing the failure rate of performative actions. Games are about managing the possibility of failure in a safe environment (2) (3). Without the possibility of failure, games wouldn’t be able to generate feedback that shows how well the player is learning to play. And games are about learning (4).

So, if games are not constrained by Shannonian design, is there another way we could envision them? A way that would bring us closer to Goffman’s view of interaction? If players are free to fail, can the culture of videogames get rid of the shackles of the culture of computers?

I do believe so.

Our performative view of interaction has led us to see games not as partners in a conversation but as things we do stuff to. Our wills plunge into the game space and change it. It’s no wonder then that these spaces often take the form of game worlds, places where we can unleash our demiurgic instincts in a familiar manner. The prevailing metaphor of non-puzzle games is one of control, either of an avatar that we can move around like a puppeteer does a puppet, or of a disembodied entity capable of changing its world (SimCity’s mayor, Civilization’s leader, god games, etc.).

This is done for the sake of immersion. The thinking goes that if the player projects himself into a convincing game world through an avatar, he’ll be more engaged in the game, more engrossed. We’re still not sure, though, whether the avatar should be an empty shell that the player can fill (Master Chief, Link, Gordon Freeman), a strong character he can empathize with (Guybrush Threepwood, Nathan Drake, Manny Calavera), or something in between (Lara Croft, most protagonists in RPGs) (5). What we do not question is the basic tenet that interaction should go through such a proxy. When we play, we either play a part or direct game agents.

This compounds the difficulty of communicating at the meta-level. The only way we can try to convey information about the context of our commands is through our proxy and our proxy’s only use is to change things in the game world. We have to try to communicate feelings, desires, or beliefs through actions. But we have no means of knowing in advance the range of what we can express, or even of understanding what meanings will be associated with our actions by the game. We just hope that some intention will be interpreted the right way. We have to trust the game. But our trust is often betrayed.

For instance, you might think that pointing a gun at an NPC in an adventure game would frighten him. More often than not, for various design reasons, it doesn’t. But the player doesn’t know in advance whether this will have an effect. With our ever more realistic immersive worlds, we build expectations that can’t be fulfilled. For even if we do implement this one behavior, there are dozens of others that would logically follow and that we don’t implement (how would the NPC react to a ticking bomb, a display of violence, hearing the “empty clip” sound cue, etc.?). So gamers become cynical about interaction. Paradoxically, since they anticipate that games will take all their commands literally and not extract meaning from them, it’s when games do so unexpectedly that players experience unease. Suddenly, the rules of communication change. If this action has a hidden meaning, will that one have a meaning too? Can I identify characteristics common to all the commands that convey a meaning? Since there is no identifiable pattern from game to game, we have to learn this over and over again through experimentation (6).

Designers have tried to address the complex issue of explicitly conveying meaning through action. Solutions so far fall into two categories: canned meaning or canned actions. The canned meaning route is exemplified by games like Black & White or Star Wars: Knights of the Old Republic, where some of the player’s actions are given an abstract qualifier relating to a fixed meaning (e.g. it’s a Dark Side or an evil deed). The player doesn’t choose the meaning he expresses; he can only trigger the ones that have been chosen by the designer. Canned actions are found in games like Heavy Rain or Mass Effect (7), where some actions can carry a great depth of varied meanings, but can only be triggered in a context chosen by the designer, like a dialog with specific NPCs at a specific point in the story. The problem here is that, for the sake of immersive realism, each of these interactions is unique. Thus the player has no way of learning the consequences of his choices in advance. Even when the intentions are made obvious by offering options so different they border on caricature, the changes in the world that these will provoke remain opaque. For instance, choosing an angry dialog line can lead to anything from slightly altering your relationship with an NPC to shooting him during a cut-scene. As per my definition of what a choice is, if you can’t know the consequences of your actions, you’re not really offered a choice. Maybe the solution to the problem of canned actions would be to find a way to clearly express the consequences of a choice at the time it’s presented. But this would require either a powerful descriptor language or simpler, less “immersive” worlds.

But even if we do find such solutions, immersion traps us into thinking about trading off the player’s expressivity for his agency. This trade-off will remain for as long as we continue to think about the player’s actions as being performative in nature. We have to look beyond action verbs.

The concept of action verbs is incredibly useful because it allows designers to talk about how the player affects the game world (jump, shoot, push, plant, build, collect, harvest, etc.). Once you know your verbs, you have a good grasp of the means with which the player will be able to overcome challenges, and you even know much about what these challenges can be. As a result, it helps you imagine your levels / game world by linking the player’s mastery of each verb-based skill to the unlocking of content.

But, as we’ve seen, as useful as this concept is, it is also extremely restrictive because it hinders our attempts to implement metacommunication. By limiting the player’s communication to action, we actually shut out six of the seven topics it can be about.

In The Design of Everyday Things, Donald A. Norman explains the process by which an actor can act upon the world (or any interactive system). Norman’s model posits that an action can be described by seven steps:

And then, the process starts again, in a loop which looks like this:

Norman’s model of action

For instance, you might have some craving for Chinese food (goal) and decide to go eat in a Chinese restaurant (intention). You’ll make sure you know where to find one, get means of payment, transportation, etc. (plan). While going there (action), you might find that the road you wanted to take is jammed, that the restaurant is closed today or that you’re lost (system’s reaction and senses). You adjust to the situation (model update, so that you can generate another plan) until you find the desired restaurant or give up (outcome evaluation and goal change).

This process is recursive (“getting out of a traffic jam” can be a goal of its own that serves a higher goal) and several such loops can run in parallel (you might need to find an ATM before reaching the restaurant).
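The goal, intention, plan, action, senses, model and outcome cycle from the restaurant example can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not Norman’s own formalism: the names `World`, `pursue`, `plans` and the obstacle table are assumptions made for this example, and the intention step is folded into choosing a plan. A goal that has a plan is broken down recursively into sub-goals; a goal without a plan is treated as a primitive action on the world, whose reaction may force a model update (a workaround that becomes a new sub-goal) or the abandonment of the higher goal. For simplicity, parallel loops are run here as sequential sub-goals.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class World:
    """Hypothetical world: maps an action to the workaround it forces (None = dead end)."""
    obstacles: dict[str, Optional[str]] = field(default_factory=dict)

    def react(self, action: str) -> tuple[bool, Optional[str]]:
        # System's reaction, as perceived by the actor's senses.
        # Each obstacle is triggered only once, then removed.
        if action in self.obstacles:
            return True, self.obstacles.pop(action)
        return False, None


def pursue(goal: str, plans: dict[str, list[str]], world: World,
           log: list[str], depth: int = 0) -> bool:
    """Run one action loop, recursing on sub-goals. Returns True if the goal is met."""
    pad = "  " * depth
    if goal in plans:
        # Goal -> intention -> plan: the plan is a list of sub-goals or actions.
        log.append(f"{pad}goal: {goal}")
        for sub_goal in plans[goal]:
            if not pursue(sub_goal, plans, world, log, depth + 1):
                # Outcome evaluation: the higher goal is abandoned.
                log.append(f"{pad}outcome: give up on {goal}")
                return False
        log.append(f"{pad}outcome: {goal} achieved")
        return True

    # No plan: this is a primitive action on the world.
    log.append(f"{pad}action: {goal}")
    blocked, workaround = world.react(goal)
    if not blocked:
        return True
    if workaround is None:
        log.append(f"{pad}model update: {goal} is impossible")
        return False
    # Model update: the obstacle turns into a new sub-goal (the recursion).
    log.append(f"{pad}model update: try {workaround}")
    return pursue(workaround, plans, world, log, depth + 1)


plans = {
    "eat Chinese food": ["get cash", "drive to restaurant", "order food"],
    "get cash": ["find ATM", "withdraw"],
}
log: list[str] = []
print(pursue("eat Chinese food", plans, World({"drive to restaurant": "take side streets"}), log))
print("\n".join(log))
```

Swapping the obstacle table for `{"order food": None}` makes the top-level goal fail, which shows the outcome step feeding a goal change.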

[If you want to know more about this model, I urge you to read The Design of Everyday Things, since I won’t address all the consequences of this definition nor the exceptions or special cases.]

If we apply this model to communication in videogames, we see how varied the information and meta-information relating to interaction can be. The player could talk about:

Wouldn’t looking beyond action verbs and considering the full spectrum of communication lead to new interactions and thus new gameplay?

It was on a dreary night of November that I beheld the accomplishment of my toils. With an anxiety that almost amounted to agony, I collected the instruments of life around me, that I might infuse a spark of being into the lifeless thing that lay at my feet.

– Mary Shelley, Frankenstein or The Modern Prometheus

For all their talk about shared authorship, game developers are building systems whose purpose is to deliver a finely crafted experience. These systems help the player enjoy this experience in two ways: by guiding him along and by making sure he doesn’t mess things up. If there’s any agency, any authorship on the part of the player, it’s within these strictly defined bounds.

If we want to enrich the interactions between player and game, we have to look at games with a different mindset. Instead of thinking of a game as a semi-autonomous object manipulated by the player and reacting to his actions, let’s consider it as a fully realized actor, imbued with a will, with its own goals and methods to attain them. These methods involve influencing the player’s behavior, in ways similar to how the player influences the game’s behavior. We have to move away from the authorial perspective in which the designer has anticipated the player’s possible choices. When the game is running, our Frankensteinian work is done.

My favorite definition of interaction has long been Chris Crawford's:

Interaction: A cyclic process in which two actors alternately listen, think, and speak.

Crawford invites us to consider both actors as equals in the interactive process. Treating games as full-fledged actors is the first step on the road to metacommunication. Thus, if we expand Norman’s model, an interaction loop looks like this:

The game’s steps in this model are not so much about the manipulation of the game’s world. The goal of the game (as an actor) is to create a satisfying experience for the player, so the game’s steps relate to that. Changing the game world is just a means toward that end. The game steps are about the following:

If both actor and system followed this model, we could redefine interactivity using Bateson’s view:

Interactivity: The process by which two actors build models of one another in order to influence each other’s behavior.

Of course, because of our Shannonian constraints and fear of failure, computer systems don’t work like this. Our systems are designed so that they help their users achieve their goals. We don’t create systems that try to change us in order to achieve their own goals. We want machines that anticipate our desires, our intents, not things that act upon us. When you “interact” with an ATM in order to withdraw some cash, for instance, the experience feels more like this:

Interacting with a computer

Most of the steps in the loop are bypassed. You don’t choose your goal: the only high-level choice you have is to use (or continue using) the ATM or not. You don’t choose your intentions, nor your plans: you can only follow the script (plan) that drives the ATM’s behavior. There’s no meaningful action you can take other than picking the next step of the script (or going back). Since this choice doesn’t have to be interpreted by the system and depends only on its script, I don’t think it qualifies as an action in Norman’s sense [I know it can sound odd right now but please bear with me. There’s a whole section coming up that details what these steps mean when you interact with a computer.] For similar reasons, because there is nothing for the user to interpret – once he has learned how to use an ATM – the senses step is meaningless: the user simply relies on the required protocol (the model he knows) to guide him. The user’s outcome step is the only one that is a bit similar to what Norman describes. It allows the user to change his mind depending on the circumstances, for instance choosing the amount to withdraw once he knows his current balance.

On the system side, the only step that matters is the one that describes the withdrawal script because we don’t want an ATM to do anything else. We wouldn’t want it to have its own goals or to try to imagine what we want to do with our money.
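To make this contrast concrete, here is a hypothetical ATM script sketched in Python. The step names, the `run_atm` function and its rules are invented for illustration; real ATMs differ. The system accepts only commands that move along its script and, at one point, an amount chosen once the balance is known (the user’s outcome step). Everything else is simply rejected, which is how the system makes the user predictable.

```python
# Hypothetical withdrawal script; step names are illustrative only.
SCRIPT = ["insert card", "enter PIN", "check balance", "choose amount", "take cash"]


def run_atm(commands: list[str], balance: int) -> list[str]:
    """Replay user commands against the fixed script and return what the user sees."""
    step = 0
    trace = [SCRIPT[step]]
    for command in commands:
        current = SCRIPT[step]
        if current == "choose amount" and command.isdigit() and int(command) <= balance:
            # The user's one real decision, made once the balance is known.
            balance -= int(command)
            step += 1
        elif command == "next" and current not in ("choose amount", "take cash"):
            step += 1
        elif command == "back" and step > 0:
            step -= 1
        else:
            # Anything outside the script is refused; nothing is interpreted.
            trace.append(f"rejected: {command}")
            continue
        trace.append(SCRIPT[step])
    return trace


print(run_atm(["next", "next", "next", "500", "40"], balance=100))
# → ['insert card', 'enter PIN', 'check balance', 'choose amount', 'rejected: 500', 'take cash']
```

Note how little the system needs to model its user: it never interprets a command, it only checks it against the script.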

Using content creation software – like a word processor or a painting program – is a bit closer to our model of interaction:

Interacting with content creation software

(Dashes indicate possible alternate paths)

In this loop, the user’s goal is to produce some predetermined content, i.e. the loop is concerned neither with the purpose of the content nor with how the creative process affects the goal. Deciding to change the goal would occur outside of this loop, since the cause would be the produced result rather than the process of interaction itself.

As with the ATM, the user must follow scripts (e.g. selecting modal tools) but, since he is given more freedom, he has to plan their use – either in advance or moment to moment. Depending on the complexity of the program and his level of freedom, he may also have to decide how he will create the content (User Intention step). He can then modify the content through the system (System Plan), which checks whether the commands are valid and translates them into changes (it’s also where automated correction like spellchecking can occur). Some systems try to anticipate a subset of their users’ needs (System Model) by providing automated steps for their planning process (helpers, wizards, intelligent default parameters, etc.).

As we can see, because of the aforementioned constraints, “interactive systems” confine themselves to a very limited portion of the interaction loop. According to Norman’s and Crawford’s definitions, most systems we encounter in our lives barely qualify as interactive.

My theory is that games can explore the full range of communication options that the steps of the interaction loop offer, and that it’s the key to discovering new gameplays.

Before examining how the steps of the interaction loop can help define gameplay mechanics, I’d like to spend a moment reviewing the history of gameplay innovation. As we’ve seen with computers, history shapes the way we think about things, and videogames are no exception. The culture of videogames, in Goodenough’s terms, has emerged from past solutions to interaction-related problems. So have our design mental locks. In order to break them, we must understand what they are and how they came to be. Turns out, it’s mostly Shigeru Miyamoto’s fault (8).

[Note to reviewers: My goal is not to be exhaustive here or too precise. I’m more interested in the trends than in the actual games. I’ll often choose games that made some mechanics popular instead of the ones that introduced them. For instance, before Pong, there was Tennis for Two. If however you think I’ve omitted or misrepresented some games or mechanics, feel free to tell me why so I can amend the article.]

It all started with Pong…

In the early days of videogames, the game worlds were simple enough that very little communicated information could afford the player a very high level of agency. Pong is the first game that offered the player total direct control over his avatar: You turned the pad’s knob a bit and your paddle moved exactly the right number of pixels on the screen (9). Pong is also one of the last games that offered this level of control.

The idea of directly controlling an avatar, the core concept of performative commands in games, was born. Many games implemented this, creating the first action verbs (move and shoot): Breakout, Defender, Space Invaders… The big difference between these games and Pong, though, was the move from the knob controller to the joystick. Commands now had to be abstracted and interpreted by the game; there was no longer a perfect equivalence between what the player did and what happened on the screen. For instance, the joystick commands for moving your ship in Defender involve an odd combination of acceleration and speed control that must be mastered before one can excel at the game.

At the same time, the new games moved from providing an instantaneous outcome for a given command (Pong’s knob angle mapped one-to-one onto the paddle’s position) to introducing delays. Ships and paddles in these games had speeds and had to travel to where you wanted them to be, which took time.
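To make the contrast concrete, here is a minimal sketch of the two control schemes, assuming a one-dimensional playfield and invented constants (`SCREEN_HEIGHT`, `MAX_SPEED`); the function names are hypothetical. The knob maps its angle straight onto a paddle position, while the joystick can only push the paddle toward where the player wants it, a few pixels per frame.

```python
# Position control (Pong's knob) vs. rate control (a joystick).
# Hypothetical names and constants, chosen only to illustrate the contrast.

SCREEN_HEIGHT = 200  # playfield height in pixels (assumed)
MAX_SPEED = 4        # pixels per frame for a joystick-driven paddle (assumed)


def knob_position(knob_angle: float) -> int:
    """Position control: the knob angle (0.0 to 1.0) maps straight onto a
    paddle position, so the outcome is immediate."""
    return round(knob_angle * SCREEN_HEIGHT)


def joystick_step(position: int, target: int) -> int:
    """Rate control: holding the stick toward the target moves the paddle
    by at most MAX_SPEED pixels this frame."""
    if target > position:
        return min(position + MAX_SPEED, target)
    return max(position - MAX_SPEED, target)


def frames_to_reach(start: int, target: int) -> int:
    """Frames a joystick-driven paddle needs to travel from start to target."""
    if not 0 <= target <= SCREEN_HEIGHT:
        raise ValueError("target must be on screen")
    frames, position = 0, start
    while position != target:
        position = joystick_step(position, target)
        frames += 1
    return frames


# The knob reaches mid-screen at once; the stick needs many frames.
print(knob_position(0.5), frames_to_reach(0, 100))  # prints: 100 25
```

Getting to mid-screen takes a single input with the knob and 25 frames with the stick: the difference between immediate and delayed outcomes, in miniature.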

These changes hint that two variables, the directedness of commands and the immediacy of their outcomes, may be of some help for classifying videogames.

If we use them as the axes of a grid, with directedness increasing to the right and immediacy increasing upward, we can see how the original arcade games stand firmly in the upper right corner.

Text adventures and turn-based games like Star Trek showed another way in which player agency could be achieved: More abstract commands that were interpreted by the game in order to generate effects.

These games were about analyzing, planning and anticipating. A goal could only be attained through a carefully thought-out sequence of actions, some of them with long-delayed effects (like putting an object in one’s inventory so it could be used later). This also allowed for more complex game worlds, since the player could express more complex intents. They sat in the opposite corner of our grid from arcade games.

There were a lot of brilliant variations in the upper right corner (Pac-Man, Tempest, Asteroids, Centipede, etc.) but arcade games were ultimately limited by what the player could express. Until Miyamoto invented the jump button.

Parts of Donkey Kong’s control panel and instructions

(cc) Ryan Bayne

The controls in Donkey Kong were so revolutionary that they required a thorough explanation, printed right on the control panel.

The jump action was a very abstract command: it depended on the character’s state and on the stick position. Furthermore, when you pressed the jump button, you lost control of your character for a while, until he had finished jumping! It was unheard of.
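The behavior described above can be sketched as a tiny state machine. This is a simplified illustration, not Donkey Kong's actual logic: it assumes a fixed, hypothetical jump length (`JUMP_FRAMES`) and a horizontal direction locked in at takeoff, and it ignores gravity and collisions.

```python
from dataclasses import dataclass

JUMP_FRAMES = 20  # hypothetical length of a jump, in frames


@dataclass
class Jumper:
    """Simplified platformer hero; not Donkey Kong's actual logic."""
    x: int = 0
    on_ladder: bool = False
    jump_frames_left: int = 0  # > 0 while airborne
    jump_dx: int = 0           # horizontal direction locked in at takeoff

    def update(self, stick: int, jump_pressed: bool) -> None:
        """Advance one frame; stick is -1 (left), 0 or +1 (right)."""
        if self.jump_frames_left > 0:
            # Airborne: the player has lost control until the jump ends.
            self.x += self.jump_dx
            self.jump_frames_left -= 1
        elif jump_pressed and not self.on_ladder:
            # The jump's meaning depends on the character's state (not on a
            # ladder) and on the stick position at takeoff.
            self.jump_dx = stick
            self.jump_frames_left = JUMP_FRAMES
        elif not self.on_ladder:
            self.x += stick  # ordinary walking: direct, immediate control


def simulate(inputs: list[tuple[int, bool]], on_ladder: bool = False) -> Jumper:
    """Feed a sequence of (stick, jump_pressed) frames to a fresh hero."""
    hero = Jumper(on_ladder=on_ladder)
    for stick, jump_pressed in inputs:
        hero.update(stick, jump_pressed)
    return hero
```

Pushing the stick the other way during the 20 airborne frames changes nothing; the stick only regains its direct effect once the jump is over.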

The idea of associating physical commands with action verbs that provided slightly delayed outcomes took the industry by storm and led to a multitude of implementations: using the pump in Dig Dug, flapping your wings in Joust, jumping (while shooting) in Moon Patrol, etc.

From there, the evolution of action games took three different paths: graphical adventure games, simulation games and fighting games.

The popularity of action verbs led to some abuses (like the 26 basic commands of Ultima IV, Computer Ambush, etc.). As they reached for more depth, games with arbitrary inputs soon hit a complexity wall.

To combat the verb glut, the mechanics for adventure games evolved by providing more direct – and less powerful – controls in games like Maniac Mansion (11).

Meanwhile, simulation games went in the opposite direction, offering more complex worlds to interact with and making economic systems part of the gameplay, like trading in Elite and production management in Dune II. Waiting for results and anticipating medium- to long-term outcomes in dynamic systems was a new experience. Giving a movement order to a unit in an RTS is not semantically different from making Mario jump, but the time delay involved in the former makes for a very different experience, allowing for commands processed in parallel and thus for tactical and strategic thinking.

While graphical adventure games tried to limit the number of their verbs, fighting games found their salvation in the combo, a more manageable model for abstract commands. Some button sequences more or less made sense in relation to the effect they produced, but others felt totally arbitrary to the layman. Combos shifted fighting games from pure physical skill to real-time planning and anticipation, and so they became more tactical. Note that combos introduced a complex continuum along the directedness axis, from “logical” combos (that can be understood from their components, like “Down” + “Leg Attack” = low sweeping attack) to abstract ones (that you just had to learn by heart, like a Shoryuken).
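Here is a minimal sketch of how a game might recognize combos, assuming inputs arrive as discrete tokens and that a move fires when the most recent inputs end with its sequence. The token names and buffer size are hypothetical, and real fighting games also enforce timing windows, which this sketch ignores. The table pairs the “logical” Down + Leg Attack example with the classic forward, down, down-forward + punch motion of the Shoryuken.

```python
from collections import deque
from typing import List, Optional

# Hypothetical move table: input sequence -> move it produces.
COMBOS = {
    ("down", "leg attack"): "low sweeping attack",             # "logical" combo
    ("forward", "down", "down-forward", "punch"): "Shoryuken",  # learned by heart
}
BUFFER_SIZE = 8  # number of recent inputs remembered (assumed)


class ComboReader:
    def __init__(self) -> None:
        self.buffer: deque = deque(maxlen=BUFFER_SIZE)

    def press(self, token: str) -> Optional[str]:
        """Record one input; return the move it completes, if any."""
        self.buffer.append(token)
        recent = tuple(self.buffer)
        # Check longer sequences first so a long combo wins over a shorter
        # one that happens to share its ending.
        for sequence in sorted(COMBOS, key=len, reverse=True):
            if recent[-len(sequence):] == sequence:
                self.buffer.clear()  # the inputs are consumed by the move
                return COMBOS[sequence]
        return None


def read_all(tokens: List[str]) -> List[str]:
    """Return every move recognized while feeding tokens in order."""
    reader = ComboReader()
    moves = []
    for token in tokens:
        move = reader.press(token)
        if move is not None:
            moves.append(move)
    return moves
```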

I cannot pin the invention of combos on Miyamoto, but he certainly popularized them for non-fighting games. The triple jump in Super Mario 64 is a textbook example of how action verbs are implemented in today’s games. But what Miyamoto did invent next had far more repercussions, solving the complexity problem for RPGs and adventure games: the contextual button in The Legend of Zelda: Ocarina of Time changed forever the idea of how we interact with virtual worlds. It was a strange combination of providing the player with more interactions while giving him less control over them, of increasing his freedom to plan while decreasing his agency at the action level. This was balanced by the expansion of the item-based modal action gameplay that was already a staple of the series, each equipped item giving access to a gameplay subsystem. Finally, another innovation was the automated jump, in which the game no longer even required explicit input from the player to perform a verb action. Agency was delegated to the game! (12)

And, like Miyamoto’s previous innovations, these quickly spread to other genres, soon becoming standards: contextual pointers in (now purely) graphical adventure games, automated modal grab in later versions of Tomb Raider, combining combos and contextualized commands in God of War or Prince of Persia, etc.

As time passed, designers stretched the boundaries of the main regions of the grid.

Because it’s associated with immediate outcomes, the blue region is mostly the domain of physical mastery. It moved left, toward more abstract commands, with rhythm games and quicktime events.

The red region extended the notion of action planning mixed with delayed outcomes to more abstract gameplay, like Match-3 games, or made the delays themselves part of the gameplay, like FarmVille.

The green region is about sub-system mastery and its expansion toward abstraction took the form of crafting mechanics or ritualized gameplay sequences, as in Phoenix Wright.

There are many old and recent games that filled some of the remaining holes in the grid, but I need other qualifiers in order to explain how they came to be so unique. Meanwhile, the grid currently looks like this:

[You can read the appendix for a further study of innovative but atypical game mechanics. I’d advise you to read the following two sections first, though, as they explain concepts I use in the appendix.]

It is interesting to note that, apart from RTSs that combine a lot of different systems, there is little overlap between game genres in this graph. This may be another clue as to why genres are so prevalent in our industry: they each offer a different answer to the very complex problem of player agency, an answer that often comes from the evolution of many previous concepts.

Ironically, the last of Miyamoto’s innovations, after influencing the whole graph, is back in the top right corner with Wii Sports.

We can see that there are still some holes in this grid, or places with very few games. Does this mean that these could be good places to find new game mechanics, new concepts for player agency? If so, we still lack some information about the nature of these places and about what they imply for the game mechanics that would end up there.

Since game mechanics of very different natures are neighbors, we also have to wonder whether a place that is already occupied can receive new kinds of games. And if so, which ones?

Decreasing Degrees of Directedness

Descending Degrees of Immediacy of Outcome

Looking at the previous grid, we can see that there is some correlation between high immediacy or directedness and high game speed. By game speed, I mean the speed of the interaction loop, or how often the player is required to act in order to reach his goal.

The relation looks a bit like this:

However, it’s only a strong trend. There are exceptions, like hidden object games and FarmVille, which have high immediacy and high directedness respectively, but very low speeds. This tells us that it may be worth exploring variations along the speed axis in our search for new game mechanics.

As it turns out, varying the speed is one of the easiest ways of generating new ideas (at least for me), since it helps you reconsider the other dimensions with a clear design constraint. [See the examples in the following "Innovating by..." sections.]

Descending Degrees of Speed

As I’ve shown above, the quality of an interactive experience is altered when shortcuts are made in the interaction loop. I’d go further and say that this quality can be described by the kind of shortcuts the interaction involves. In my introduction, I wrote: “We know that there’s something different in Portal, Braid or Echochrome, but we’d probably be at a loss if asked to explain what it is without describing the actual game mechanics.” The shortcuts in the interaction loop provided me with the vocabulary for describing the nature of what is new in these games.

If we say that game mechanics are made of gameplay elements (the smallest constituents to which we can ascribe meaning), shortcuts in the interaction loop provide a description of the purpose of each gameplay element.

But first, how can shortcuts even exist? Norman’s model seems to indicate that a person goes through each step for each of his actions. Actually, that’s not the case. A lot of our knowledge about the world is chunked, so thoroughly integrated that we don’t consciously think about it when we use it. We bypass the analysis of the now and trust our knowledge forged in the past. We match patterns while observing the world and use the stored scripts and procedures that they retrieve in our brains. For instance, when we’re about to leave our home, we don’t spend any time perceiving the structure of the door's handle, modeling how the door works (should I push or pull?), estimating the amount of strength it requires, etc. Instead, our hand automatically finds the handle, operates it and opens the door. The whole thing can even occur subconsciously if we’re busy with something else. We may even have taught ourselves a special version of this script, like checking that we have our keys in our pocket before closing the door behind us, and that too can be executed subconsciously. In fact, the vast majority of our actions work like this. We only use the full seven steps when our chunked knowledge is not enough to deal with our needs: when faced with an unexpected or new situation, or when learning.

When we open our door to leave our home in the usual context, the shortcut in the interaction loop is from our Intention step (we want to leave and that’s how we’re doing it, as opposed to jumping out the window) to the world’s Action step (we want to directly change the physical state of the world and be on the other side of the door).

Shortcuts link the steps that matter to the actors in the current interaction. That’s why not all shortcuts go through the human’s Action step. Opening your door is not a feat of action; it’s the reason why you do it that matters. Similarly, in a game, it would seem that interaction requires going through the player’s Action step, in order to actually generate some inputs for the game. Actually, the player’s Action step is more about the player’s freedom of action and physical skill-based gameplay. When you choose to press either the “OK” or the “Cancel” button in a game, it’s no more a feat of Action than opening your door: it’s you making a choice based on your understanding of which changes will occur in the game, a direct application of how good your model is. Thus, it makes much more sense to represent this by a shortcut coming from the player’s Model step instead of his Action step, even if pressing a button is an action (15). This thinking applies equally to all the steps, on either the player’s or the game’s side.

Here’s an example of a shortcut specific to games, the quicktime event or QTE. In a QTE, the player has a short amount of time to generate an input or an input sequence specified by the game. He has no choice in the matter nor can he influence the consequences of this interaction (which are often unknown, except when the QTE is part of a recurring gameplay system). Thus, the game forces the player to perform a skilled action and is only interested in checking if he does what he’s supposed to. By our previous definitions, this means that the shortcut for a QTE looks like this (16):

Shortcut generated by a Quicktime Event

From now on, I will use the following notation for shortcuts: {Game Outcome => Player Action}

When I introduced the steps of the interaction loop, I tried to explain what they were about in the general context of communication. I’d like to revise these descriptions and adapt them to the specific context of the interaction between a player and a game, so as to make them more useful for our purpose. This is possible because we can confidently make some assumptions about what’s going on, the goals of the two actors, their means, the obstacles they must overcome, etc.

Since explaining the 14 steps in detail, as well as their roles as origin or destination of a shortcut, takes a lot of space, I’ve put everything in the appendix. Read it at your leisure.

Using this mapping method, a gameplay element can be positioned in a five-dimensional space whose axes are directedness, immediacy, speed and the two ends of a shortcut in the interaction loop. A single point could be enough to qualify a game system. Complex game mechanics – like the manipulation of light in Closure – could however require several points.

We can now fully describe where QTEs stand in the game design space:

Quicktime Event
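As an illustration only, the coordinates of a gameplay element could be recorded as follows. This sketch rests on several assumptions: the three continuous axes are normalized from 0.0 (low) to 1.0 (high), the `Step` enum lists only the six step names used in this section rather than the loop's full seven, and the QTE values are rough guesses consistent with the variations discussed next (a QTE offers no real choice, acts immediately and demands quick input). All class names are hypothetical.

```python
from dataclasses import dataclass, replace
from enum import Enum


class Actor(Enum):
    PLAYER = "Player"
    GAME = "Game"


class Step(Enum):
    # Only the step names used in this section; the loop has seven per actor.
    GOAL = "Goal"
    INTENTION = "Intention"
    PLAN = "Plan"
    ACTION = "Action"
    OUTCOME = "Outcome"
    MODEL = "Model"


@dataclass(frozen=True)
class Endpoint:
    actor: Actor
    step: Step

    def __str__(self) -> str:
        return f"{self.actor.value} {self.step.value}"


@dataclass(frozen=True)
class GameplayElement:
    name: str
    directedness: float  # 0.0 = heavily interpreted command, 1.0 = fully direct
    immediacy: float     # 0.0 = long-delayed outcome, 1.0 = instant outcome
    speed: float         # 0.0 = rare inputs, 1.0 = constant inputs
    source: Endpoint     # where the shortcut starts
    target: Endpoint     # where the shortcut lands

    def shortcut(self) -> str:
        """Render the shortcut in the article's {Source => Target} notation."""
        return "{" + f"{self.source} => {self.target}" + "}"


# Rough, hypothetical coordinates for a quicktime event.
QTE = GameplayElement(
    name="Quicktime Event",
    directedness=0.1,
    immediacy=0.9,
    speed=0.9,
    source=Endpoint(Actor.GAME, Step.OUTCOME),
    target=Endpoint(Actor.PLAYER, Step.ACTION),
)

# Changing one coordinate yields a neighboring element, e.g. a slower QTE.
SLOW_QTE = replace(QTE, name="Slow QTE", speed=0.3)

print(QTE.shortcut())  # prints: {Game Outcome => Player Action}
```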

These coordinates give us a basis for speculating about what other kinds of game elements reside in the vicinity of QTEs. How could QTEs evolve or mutate if we modified one or several of these values?

Let’s see.

If we want to increase directedness, we must provide the player with a way of expressing some kind of meaning. It could take the form of a choice between two commands, two simultaneous QTEs whose outcomes are predictable because they use the language of interaction the player is familiar with. For instance, a huge monster is jumping at you and you can only react by crouching or throwing a grenade.

If we want to decrease immediacy, maybe QTEs could generate postponed cumulative effects. For instance, the status of an avatar could depend on how often he performs the correct QTE-driven greeting rituals when meeting NPCs. Failing the social QTE could affect the following conversation with an NPC, or have no immediate effect at all.
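A minimal sketch of such a postponed, cumulative effect, assuming a hypothetical `SocialStanding` score: each greeting QTE only nudges the score, and nothing happens until a later conversation reads it against a threshold. Names and numbers are invented for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SocialStanding:
    score: int = 0

    def record_greeting(self, succeeded: bool) -> None:
        """A greeting QTE has no immediate effect; it only shifts the score."""
        self.score += 1 if succeeded else -1

    def conversation_tone(self, threshold: int = 3) -> str:
        """The accumulated score is read only when a later conversation starts."""
        if self.score >= threshold:
            return "warm"
        if self.score <= -threshold:
            return "cold"
        return "neutral"


def standing_after(results: List[bool]) -> SocialStanding:
    """Replay a series of greeting QTE results (True = performed correctly)."""
    standing = SocialStanding()
    for succeeded in results:
        standing.record_greeting(succeeded)
    return standing
```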

If we want to decrease the speed, we can make the input non-modal, maybe by shifting it to buttons reserved for this use. The “QTE” opportunity would last from a few seconds to a few minutes, like a boost the player can only use during this time window. For instance, upon entering a ballroom, a “first impression” boost becomes available that can be triggered on any NPC in the room, launching a unique action (“Your eyes do not deceive you, I am the legendary masked avenger!”). The chosen NPC may have a unique reaction too. If the player waits too long, the NPCs will have grown used to his presence, which may trigger other NPC reactions. A time gauge would be useful for this kind of QTE.
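The time gauge could be driven by something as simple as this sketch, which assumes a hypothetical `TimedBoost` that opens when the player enters the room, reports the fraction of its window that remains, and can be spent once on any NPC. The 30-second window and the returned strings are placeholders.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TimedBoost:
    opened_at: float            # time (seconds) the player entered the room
    duration: float = 30.0      # length of the "first impression" window (assumed)
    used_on: Optional[str] = None

    def gauge(self, now: float) -> float:
        """Fraction of the window left: 1.0 when just opened, 0.0 once expired."""
        remaining = self.opened_at + self.duration - now
        return max(0.0, min(1.0, remaining / self.duration))

    def trigger(self, npc: str, now: float) -> str:
        """Spend the boost on one NPC, if the window is still open."""
        if self.used_on is not None:
            return f"{npc} reacts normally (boost already spent)"
        if self.gauge(now) == 0.0:
            return f"{npc} has grown used to you"
        self.used_on = npc
        return f"first impression on {npc}"
```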

Changing the shortcut means altering the purpose of the interaction, so it could lead us to very different places in the game design space. Let’s however try to find a couple that are still close to the spirit of the QTE.

{Game Model => Player Action} could mean that the player is given a short amount of time to tell the game about how he feels or what he thinks. After a meaningful change occurs in the game world (e.g. a vanquished enemy), the player can signify that he found this experience satisfying (maybe by pressing up on the d-pad). The game would adjust its model and tailor future content ({Game Model => Game Goal}) to suit the player’s tastes. The quick input may be required because the game is fast-paced and the QTE is non-modal, so that there’s no ambiguity as to which change in the world the player is talking about.
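A minimal sketch of that feedback loop, assuming the game tags each meaningful change with a content category and keeps a hypothetical preference weight per category. A quick “I liked that” input right after the change raises the matching weight ({Game Model => Player Action}), and the content picker then favors the highest-weighted category ({Game Model => Game Goal}). The category names and learning rate are invented.

```python
from typing import List, Optional


class PlayerTasteModel:
    """Hypothetical game-side model of what the player enjoys."""

    def __init__(self, categories: List[str], learning_rate: float = 0.5) -> None:
        self.weights = {category: 0.0 for category in categories}
        self.learning_rate = learning_rate
        self.last_change: Optional[str] = None

    def world_changed(self, category: str) -> None:
        # Remember which change a quick input would refer to.
        self.last_change = category

    def player_liked_it(self) -> None:
        # {Game Model => Player Action}: the quick input updates the model.
        if self.last_change is not None:
            self.weights[self.last_change] += self.learning_rate
            self.last_change = None  # one signal per change, no ambiguity

    def next_encounter(self) -> str:
        # {Game Model => Game Goal}: tailor upcoming content to the model.
        return max(self.weights, key=self.weights.get)
```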

{Game Outcome => Player Model} could have the game test the player’s knowledge of the game world with an interrupting multiple choice question. Since it no longer involves the Player Action step, this element doesn’t have to be quick (the question could be timed or not), but it can still be a modal interruption generated by the game. This could be used to refresh the player’s knowledge (before a specific challenge) or test whether a tutorial is needed. This could take the form of an interrupting communication from a drill sergeant (“Which of your weapons will you use against those damned fiery bugs?”).

If we changed both extremities of the shortcut, {Game Plan => Player Plan} could be a modal dialog that asks the player to select which of several tasks or behaviors receives the highest priority and will be executed first, or will become the dominant one. For instance, the player sends a scout NPC forward and the game interrupts to ask him for his favored method of movement for the scout: stealth or speed. This gameplay element makes sense for choices that the player cannot easily change – if, in this example, he cannot communicate with the scout before he comes back.

We can also use this method for transforming high level mechanics. For instance, let’s say that we’d like to explore variations on DROD. DROD is a fully deterministic turn-based dungeon crawler. As such, its coordinates are:

DROD


DROD has a unique position in the game design space because of its unusually low speed, in spite of providing high directedness and immediacy. If we were to increase its speed significantly, it would probably require that we move toward simple commands and {Player Action => Game Plan}, in Gauntlet’s territory. Since this region has been pretty well explored already, let’s try instead to move to the other corners of the directedness vs. immediacy grid.

A high directedness, low immediacy version of DROD that preserved its underlying mechanics would add delayed effects or effects that take a long time to generate. Set collection of visible items seems appropriate for this game because it fits the planning theme. For instance, there could be colored gems in a room. Collecting all the gems of the same color (or five gems of this color, or three in a row) would trigger the spell associated with the color.
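The trigger rule could be sketched as follows, assuming the “five gems of the same color” variant; the color-to-spell table and the `GemPouch` class are hypothetical placeholders. Because the gems are visible and the rule is fixed, the game stays fully deterministic: the player can plan exactly when each spell will fire.

```python
from collections import Counter
from typing import List, Optional

SET_SIZE = 5  # gems of one color needed (the "five gems" variant)
SPELLS = {"red": "fireball", "blue": "freeze", "green": "heal"}  # hypothetical


class GemPouch:
    def __init__(self) -> None:
        self.counts: Counter = Counter()

    def collect(self, color: str) -> Optional[str]:
        """Add one gem; return the spell it triggers if it completes a set."""
        self.counts[color] += 1
        if self.counts[color] == SET_SIZE:
            self.counts[color] = 0  # the completed set is spent on the spell
            return SPELLS.get(color)
        return None


def spells_cast(colors: List[str]) -> List[str]:
    """Collect gems in the given order and list the spells they trigger."""
    pouch = GemPouch()
    cast = []
    for color in colors:
        spell = pouch.collect(color)
        if spell is not None:
            cast.append(spell)
    return cast
```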

A low directedness, high immediacy version would introduce uncertainty as to the consequences of an action. If we want to preserve the deterministic aspect of the game, we could add known but unpredictable secondary meanings to the player’s actions. The player would be aware of the secondary meaning before making his choice this turn (determinism), but he wouldn’t be able to predict which secondary meanings would randomly be associated with his commands on the next turn (low directedness that affects the Player Plan step). In DROD, the only available command is choosing the neighboring tile in which you want to move your avatar (or passing). After each of the avatar’s moves, this version of DROD would add an effect chosen at random to each of the neighboring tiles (showing its associated icon), to be triggered if the avatar moves there on his next turn. Possible effects: shoot an arrow in your current direction, move one more tile in that direction, freeze nearby enemies for n turns, pass next turn, no extra effect, etc. This would add a “press your luck” element to the game, inviting the player to try to reach places that would be deadly under the normal rules.
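A minimal sketch of this rule, assuming tiles are (x, y) pairs, walls and enemies are ignored, and a seeded `random.Random` stands in for the game's generator. The effect strings are shorthand for the examples above, and the function names are hypothetical. The point is the split between what is known (the current offer, shown before the player moves) and what is not (the next offer, rolled only after the move).

```python
import random
from typing import Dict, Tuple

Tile = Tuple[int, int]

# Shorthand for the example effects; "freeze enemies" would last n turns.
EFFECTS = ["shoot arrow", "move one more tile", "freeze enemies",
           "pass next turn", "no extra effect"]

NEIGHBOR_OFFSETS = [(dx, dy) for dx in (-1, 0, 1) for dy in (-1, 0, 1)
                    if (dx, dy) != (0, 0)]


def roll_offer(position: Tile, rng: random.Random) -> Dict[Tile, str]:
    """After a move, give each of the 8 neighboring tiles a random effect.
    The result is shown to the player before he picks his next move."""
    x, y = position
    return {(x + dx, y + dy): rng.choice(EFFECTS) for dx, dy in NEIGHBOR_OFFSETS}


def take_turn(position: Tile, destination: Tile, offer: Dict[Tile, str],
              rng: random.Random) -> Tuple[str, Dict[Tile, str]]:
    """Resolve one turn: stepping onto a tile triggers its visible effect
    (deterministic), then a new, unpredictable offer is rolled."""
    if destination == position:
        effect = "no extra effect"  # passing the turn
    elif destination in offer:
        effect = offer[destination]
    else:
        raise ValueError("the avatar can only move to a neighboring tile")
    return effect, roll_offer(destination, rng)
```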

A low directedness, low immediacy version could combine these two ideas: collecting spell components from a random offering in the neighboring tiles. The spells could trigger on completion, or the components could become part of a persistent avatar profile, modifying his abilities. These modifications could be fully deterministic (10 collected opals let you pass through poisonous gas) or could alter the probabilities of generating effects, like when building your deck in Dominion.

DROD variations

I’m not saying that all of the above mechanics would generate compelling gameplays – I came up with them while writing this section, so they’re pretty rough – but, as far as I know, they’re new and I wouldn’t mind playtesting at least a couple of them.

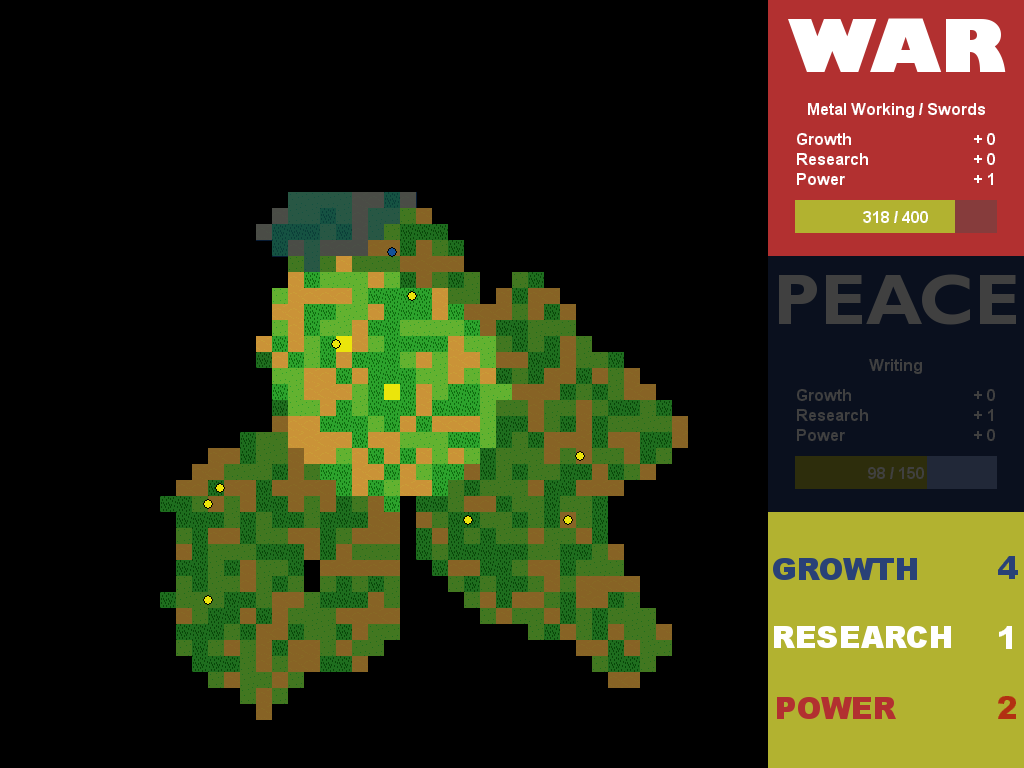

I developed War and Peace for Gamma 4's One-Button Games challenge. The design constraints for this competition were:

Since I wanted to make this development a test case for my methodology, I decided that I would be shooting for extremes on the directedness vs. immediacy grid. This led me to ponder the maximal amount of meaning I could pack into a single button press.

If I discarded the idea of “cheating” by associating several different commands with the different ways the button could be handled (pressing, holding, releasing, patterns, timed choices, etc.), I would only have one command in my game. If it were to be packed with meaning, that command would have to be heavily interpreted and thus reside on the far left of the directedness axis. Even so, I wanted to give the player significant choices, so I didn’t go down the QTE road. This limited the kind of consequences I could associate with the player’s actions: they had to be part of regular gameplay and, as such, be more or less predictable (once the player understood the game’s model).

At the time, I couldn’t imagine how I’d do that in the upper left corner (high immediacy), so I moved to the lower left (low immediacy). Commands would have medium to long term effects on an ongoing process that wasn’t controlled by the player.

This corner of the grid is home to empire builders and god games. Could I make a one-button Civilization?

The other design constraint, making a short game, gave me the key. Usually, the game elements in this corner of the grid have very low speed. Increasing the game’s speed – and its ongoing process – would allow me to give meaning to when the player used the command without making the game boring.

As for the shortcut, as I’ve discussed before, I’m a big believer in letting the player explicitly state his intention (see also Totems below), so I wanted to try that: the player’s intention would indirectly translate into changes in the behaviors of objects in an ongoing process controlled by the game. Which gives us the game’s ID card:

Finding what the command should be was not much of a challenge, thanks to the countless hours I’ve spent playing Civilization IV. In order to pursue your currently chosen victory condition in Civilization, you constantly have to answer the question of whether you’re expanding your civilization or going to war. So that would be the moment-to-moment high-level choice given to the player: switching his civilization between peace (growth, acceleration) and war (conquest, power) states. It didn’t hurt that War and Peace was a cool title for this game.
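Reduced to its bare bones, the single command could look like this sketch. It is not War and Peace's actual code: it assumes a hypothetical `Civilization` that the game advances every tick, with peace compounding growth and war converting part of that growth into power, and all rates are invented. The button only flips the state; the meaning lies in when the player presses it.

```python
from dataclasses import dataclass
from typing import Set


@dataclass
class Civilization:
    at_war: bool = False
    size: float = 10.0   # population / territory (arbitrary units)
    power: float = 0.0   # might gained through conquest (arbitrary units)

    def press_button(self) -> None:
        """The only command: switch between peace and war."""
        self.at_war = not self.at_war

    def tick(self) -> None:
        """The ongoing process, advanced by the game every tick."""
        if self.at_war:
            spent = 0.1 * self.size   # war converts part of the civilization
            self.size -= spent
            self.power += 2 * spent   # into power
        else:
            self.size *= 1.1          # peace: compounding growth


def play(press_ticks: Set[int], ticks: int) -> Civilization:
    """Simulate a game in which the button is pressed at the given ticks."""
    civ = Civilization()
    for t in range(ticks):
        if t in press_ticks:
            civ.press_button()
        civ.tick()
    return civ
```

Running `play({2}, 10)` and `play({5}, 10)` ends with different amounts of power: the same command, pressed at a different moment, means something different.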

War and Peace

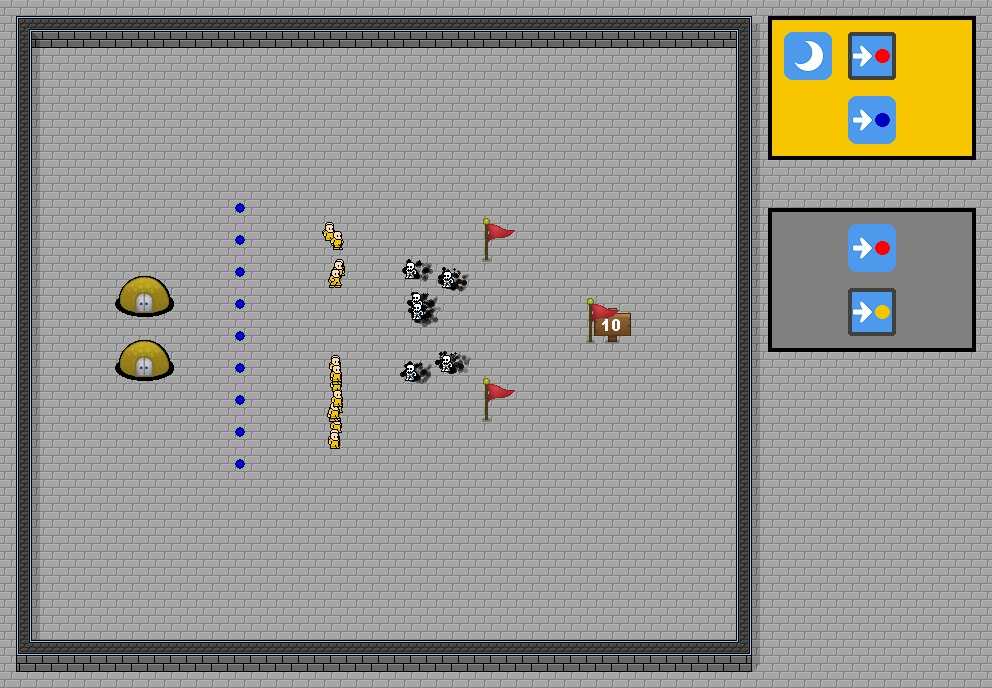

Looking at the directedness vs. immediacy grid, I noticed that the bottom right corner (high directedness, low immediacy) was mostly empty, so I tried to design a game that would fit this space. The result is Cortical.

Cortical

I decided to go with what the “typical” characteristics of a game in this corner would be: high speed and a focus on action planning and behavior manipulation. Which gave me the following coordinates:

The reason why this spot in the game design space was deserted made sense. In order to plan efficiently, you either need time (lower speed) or you need the game to interpret your commands so that you can express complex orders quickly (lower directedness). You can’t simplify your behaviors too much either, because that would change the nature of the shortcut (aiming at Game Action – directly changing the state of the world – instead of Game Plan).

So I needed a high speed game element that modified game objects’ behaviors in a fully predictable way. I addressed this problem by exposing the gameplay behaviors to the player. He would be able to pick game behaviors for the game agents in a non-ambiguous fashion (maximal directedness). All the agents would follow the same rules and have their behaviors exposed, so that the player can have complete knowledge of what’s happening (and what’s possible) in any given level. Pure Player Plan.

Like DROD, Cortical is fully deterministic, but it plays in real time.
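To illustrate what exposing behaviors can mean in code, here is a minimal sketch, not Cortical's actual implementation: all agents draw their behaviors from one shared, readable rule set, the player's only command is picking a behavior and a target for an agent, and every agent reads the same snapshot of the world during a fixed real-time step, which keeps the simulation deterministic. The behavior names (`chase`, `flee`, `idle`) and the one-dimensional world are assumptions made for brevity.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


def _toward(me: int, other: int) -> int:
    """Direction (-1, 0 or +1) from my position to the other's position."""
    return (other > me) - (other < me)


# Shared, fully visible rule set: how far do I move this tick?
BEHAVIORS: Dict[str, Callable[[int, int], int]] = {
    "chase": lambda me, other: _toward(me, other),
    "flee": lambda me, other: -_toward(me, other),
    "idle": lambda me, other: 0,
}


@dataclass
class Agent:
    name: str
    position: int
    behavior: str                  # exposed: the player can read it at any time
    target: Optional[str] = None   # name of the agent this behavior refers to


def command(agent: Agent, behavior: str, target: str) -> None:
    """The player's order: pick one of the shared behaviors for an agent."""
    if behavior not in BEHAVIORS:
        raise ValueError(f"unknown behavior: {behavior}")
    agent.behavior, agent.target = behavior, target


def tick(agents: List[Agent]) -> None:
    """One fixed real-time step. Everyone reads the same snapshot, so the
    outcome never depends on update order."""
    snapshot = {a.name: a.position for a in agents}
    for agent in agents:
        if agent.target is not None:
            move = BEHAVIORS[agent.behavior]
            agent.position += move(agent.position, snapshot[agent.target])


def run(agents: List[Agent], ticks: int) -> Dict[str, int]:
    """Advance the simulation and return the final positions."""
    for _ in range(ticks):
        tick(agents)
    return {a.name: a.position for a in agents}
```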

These design choices led to very interesting discoveries – I invite you to play the game in order to understand more fully what I’m talking about. For instance:

Cortical is the simplest expression of a thought experiment, but its underlying principles could be used in more polished games: Real-time behavioral puzzles, explicit high-level commands that have a medium to long-term effect on an ongoing process, like diplomacy, setting the mood of a conversation, political or economical leanings in a simulation, etc.

This gameplay could easily be adapted to an RTS game, where units can receive orders to attack and chase specific kinds of enemy units, escort other units, build certain kinds of buildings, etc. Orders could also be more complex, involving sequences of actions or conditions. It could be used to handle companions in an action game or a role-playing game, without feeling like programming or being as complex as Final Fantasy XII’s gambit system.

One could also explore the AI possibilities behind exposing behaviors to agents. Going back to level 10, what if an agent could anticipate the effect he would have on another agent that perceives it and plan accordingly? What kind of AI could solve this level? This AI would definitely be able to dynamically draw an enemy agent, or even the player, into a trap.

I supervised the design of Totems when I was 10tacle Belgium Studios’ creative director. Our goal was to make a AAA parkour & fighting game with magical super-powers.

Given the fluidity of parkour, I wanted our game to focus on grace and ease of use. Our design motto was “It should be easy to do cool stuff.” I didn’t want the controls to be challenging. This game wasn’t about the player skillfully performing tricks. It was about controlling a hyper-competent and deadly parkour artist. I wanted to recapture the exhilaration I had felt performing my first triple jump in Super Mario 64 or my first “shooting while doing a back-flip” in Tomb Raider.

But in order to achieve this goal, I had to vanquish my enemy: the jump button.

The concept of Totems wouldn’t have existed without Prince of Persia: The Sands of Time. I was thoroughly impressed and inspired by this game, but I didn’t want to make a game that relied so much on the player’s mastery of the controls or that offered so few choices during the parkour phases. I wanted to give the player more freedom. In Sands of Time, most of the parkour actions (besides wall running) are triggered by the jump button and are contextually interpreted to fit the environment, from jumping off a column to vaulting off a horizontal bar. This means that the jump button serves two very different purposes: it combines the intention (I want to pass this obstacle) and the action (I’m interacting with this object). Using a jump button would confine us to a platformer gameplay because the player wouldn’t be able to express his intentions using the core mechanics.

Incidentally, Sands of Time’s designer Patrice Désilets was struggling with the same problem when working on Assassin’s Creed. His solution for letting the player express his intentions was rather elegant: he introduced a user-maintained mode (what Jeff Raskin called a quasimode) that the player could use to qualify the action buttons. By holding a trigger, the player could switch to the “high profile” quasimode in which one of the action buttons acted more or less like the jump button in Sands of Time. In order to make the relation between the commands of the high and low profiles understandable to the player, Désilets used a simple metaphor: each button was associated with a part of the avatar’s body that the player controlled like a puppet; one for the head (observation), two for the hands (attacking, grabbing, pushing), one for the legs (sprinting, jumping, sitting).

Totems didn’t have the same needs as Assassin’s Creed (the avatar in Totems was always in “high profile” mode). I wanted to let the player quickly and directly express his intentions with buttons and have the game contextually translate these commands into maneuvers that could be chained. Which put me in this part of the game design space:

Totems – Super-Powered Parkour

The difficulty was finding the right commands that the player could associate with both intentions and actions. Yves Grolet (the studio’s CEO) suggested that we use animals as our metaphor, since some animals have very strong functional associations in people’s minds. I went ahead and decided to push this idea to its limits: animals wouldn’t be the qualifiers for verb-based commands, they would be the commands themselves. Pressing a button would make the avatar act as if the corresponding animal was controlling her body and was lending her its powers. So the game became naturally about a parkour-skilled shaman and her relationship with her guardian spirits.

We ended up with the following commands / spirits and their functional associations:

Totems’ controls

Here’s an example of how it worked. Let’s say your avatar is running toward the edge of a roof. Pressing each button triggers a different contextual action that reflects the nature of the associated spirit:

Four different intentions when jumping in Totems

Instead of having a jump button, Totems had four commands that you could choose from for telling the game what you wanted to do in a jumping context.


Bouncing Cheetah Jump in Totems


This metaphor also translated to combat, with Monkey dodging and ridiculing enemies (à la Spider-Man), Cheetah providing fast attacks and quick target changes, and Bear delivering slower but more powerful crushing blows.
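The core of this scheme is a lookup from (spirit, context) to a maneuver, rather than from button to verb. Here is a minimal sketch, assuming the game has already classified the avatar's situation into a context label: the combat entries follow the description above, while the “roof edge” entry and the function name are hypothetical. The fourth spirit is not named in this section, so it is left out rather than guessed.

```python
from typing import Optional

# (spirit, context) -> contextual maneuver. The combat entries follow the
# article; the "roof edge" entry is a hypothetical placeholder named after
# the "Bouncing Cheetah Jump" caption.
SPIRIT_ACTIONS = {
    ("monkey", "combat"): "dodge and ridicule the enemy",
    ("cheetah", "combat"): "fast attack, quick target change",
    ("bear", "combat"): "slow, powerful crushing blow",
    ("cheetah", "roof edge"): "bouncing jump",
}


def interpret(spirit: str, context: str) -> Optional[str]:
    """A button names a spirit (an intention), not a verb; the game picks the
    maneuver that fits the current context, or nothing if none applies."""
    return SPIRIT_ACTIONS.get((spirit, context))
```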

Totems did not have action verbs at the heart of its mechanics. It had adverbs that gave meaning to its action contexts.

Once you’ve designed and developed your innovative game mechanics, the tough part begins: helping the player understand and adopt them.

The second reason why games are hard to learn, besides defining their own culture, is that teaching new shortcuts is hard to do well. By definition, it’s teaching a new way of interacting. Bateson wrote that during interaction, actors not only communicate about its context (“I’m your boss, you owe me respect”), but they may also do so about the context of the context (“By saying what I’m saying I’m challenging your authority”), and so on ad infinitum. That’s what shortcuts are: contexts of contexts of interaction. They don’t talk about the game content; they don’t even talk about the experience of playing. They talk about how you shape this experience. To get the player to access this part of his brain, you have to ease him into it.

Games are intrinsically hard to learn because players have to learn four things at once: how to use the game and interact with it, how the game works and what its rules are, what the game is about, and how to have meaningful exchanges with it. New players are learning the language of interaction while using it to accomplish goals. It’s like learning to drive while competing in a race.

The difficulty is compounded by the limited tools the player is given to experiment with the game and build his own model. [On that subject, see the part about epistemic actions in the Player Model section of the appendix.]

That’s another reason why genres are so persistent in our industry. When a player comes to a game through its genre, he is likely to look for what he expects to see (the common features in RPGs or RTSs). Innovation then becomes the difference between what the game offers and the player’s expectations. This is an easier path for the designer because it brings innovation front and center for the player and frames it in a context he already understands (e.g. Active Reload in Gears of War). This is one of the paradoxes of innovation: the closer a game is to the player’s expectations, the more readily he’ll adopt its new features.

Game genres are subcultures that encompass the workings of all the aspects of their games: interface, controls, acceptable behaviors and feedback, goals and outcomes, saving progress, etc. These categories have been defined at a meta level – the culture of videogames – and are seldom questioned or changed. Indeed, the cost of doing so is even higher than that of proposing a new genre. So, most of what we call experimental games still stay within the accepted rules of the meta level. For instance, there are unwinnable or “pointless” games that offer a commentary on the experience of playing (Achievement Unlocked, You Have to Burn the Rope, You Only Live Once), games that turn predictable behaviors on their head (I Want to Be the Guy, Karoshi), etc. Even so, it’s interesting to note that such games almost always stay within the confines of a genre (more often than not, the platformer). It’s hard to mess with both the meta and the genre levels. You need shared vocabulary and syntax in order to communicate. That’s what genres provide.

So how do you innovate beyond genres or so radically within a genre that it changes some of its subculture’s rules? Slowly and thoroughly.

Innovation calls for a holistic approach to game design. Since you’re teaching the player a new way of thinking about interaction, until you know that he has acquired the new model, every aspect of the game should be about the innovative parts. Experimentation should be facilitated and encouraged. Story, setting and theme should all support the gameplay and offer metaphors for manipulating its concepts. Think about Katamari Damacy. Think about Portal.

The puppet metaphor in Assassin’s Creed carried over to the game’s story and setting. In Totems, the spirit metaphor shaped its world and story. For instance, the game took place on an island divided into four large districts, each dedicated to furthering the understanding of one of the spirits through the appropriate architecture and gameplay. These districts were placed in the same positions on the island as the corresponding buttons on the pad. The story was about bringing balance to a cosmos whose symbolic representation shared the shape of the island and the pad. The team built a technology and production pipeline from the ground up for implementing intention-based contextual actions. Every aspect of the game was tied to the new interaction model.

Lastly, when teaching the player, be kind and forgiving.

Goffman explained that a few principles govern both the preservation of social order and interactions. A majority of those deal with how actors handle transgressions of communication protocols. Someone who doesn’t follow the rules is seen as clumsy and generates unease. If he persists, he is viewed as faulty or as a deviant. He feels ashamed, guilty. His interactor can feel insulted, shocked, provoked, or impatient. In order to restore the status quo in the relationship, the latter must show some form of forgiveness (real or feigned) when the former finally complies.

That’s how we’re trained to approach culture and that’s how we feel when we fail at interacting, even with a game. So the game has to acknowledge this, show kindness and be a partner in the learning process, not an uncaring judge of the player’s performance. For instance, Chris Crawford suggested that the machine should take the blame when an interaction fails (“I can’t seem to be able to open this door. I must be missing something.”) instead of admonishing the player (“You need a key to open this door.”). It’s subtle, but communication is subtle. It makes all the difference between a pleasing learning experience and a frustrating one.

Good new ideas are hard to come by. You shouldn’t be ashamed to spend all the time you need to find them.

Innovation is scary. It’s expensive, it’s risky, it’s hard to market…

What I aim to do with this work is provide game designers with tools that reduce these factors a little bit, maybe enough that their ideas will be given a chance.

Will you make the leap of faith and apply this theory to your design? Ultimately, my taxonomy may not work for you; you may need your own. But I hope I’ve convinced you that a formal approach to game design innovation is possible.

Children are now born in a world of games. They’ll want, they’ll need games that say meaningful things about their lives. And they’ll make them too. The videogame medium transcends languages and cultural boundaries. With the advent of cheap connected computers for children in developing countries, these cultural barriers are about to totally break down. Who knows what wonderful new ideas these children will turn into games? We can guide them along this path, show them how it’s done.

I have no doubt that videogames will change the world. Great things are ahead.

[Todo. Many, many]

[Note to reviewers: This part is still under construction. Proceed with caution.]

This step is about the player receiving new information about the content and the state of the game. Such information can take many forms: audiovisual cues, time since the beginning or end of a stimulus, coded information (gauges, graphs, symbols, etc.), natural language, vibrations, perception filters (highlights, see-through, etc.).

Commonly, this information is about one or several of:

The common factor between these is that this step is about perceiving meaningful information (either in the game’s world or for the player), not just noise. This means that it can also be about aesthetics.

Anything related to active manipulation of perceptible game information by the player. For instance, the player can turn some information filters on and off, choose camera views, look at statistics, get hints about objects in the world, review mission briefings, etc.

Brilliantly exploited in Splinter Cell: Pandora Tomorrow’s multiplayer mode (asymmetric views, vision modes, sticky cams, AR markers, etc.) {Player Senses => Player Plan}

It’s also about putting perceptible information in the game world that addresses metacommunication or another step of the loop. In multiplayer games, this information may be perceived by other players. For instance, the ability to spot enemies for your teammates (which creates AR targets) in Battlefield: Bad Company 2 {Player Senses => Player Plan}, and the communication markers in Portal 2’s co-op mode {Player Senses => Player Intention}. Also, taking notes in The Legend of Zelda: Phantom Hourglass {Player Senses => Player Model / Player Intention / Player Plan}

Hidden object games {Player Senses => Game Outcome}

Sorting game in WarioWare {Player Senses => Player Action}

Looking for hidden patterns in a 3D scene by modifying the viewpoint (Starlight 2, Toys, “?” Riddler Challenges in Batman: Arkham Asylum) {Player Senses => Game Outcome}

Echochrome 2 {Player Senses => Game Outcome} and {Player Senses => Player Plan}

Kinect is ripe for much exploration here.

The shortcut’s origin causes the game to add, remove or alter information dynamically in a way that affects the player’s experience. For instance, turning the screen white and playing a high pitched noise for a while when the player’s avatar suffers from the effects of a flash-bang grenade {Game Action => Player Senses}; displaying the avatar’s hallucinations {Game Plan => Player Senses}; giving hints by highlighting an object in the world or having the avatar notice it (as in The Legend of Zelda: The Wind Waker {Game Plan => Player Senses and Model}); playing the “boss theme music” in anticipation of an upcoming and unavoidable big fight {Game Outcome => Player Senses and Outcome}; etc.

Queries about the player’s perception. For instance, asking the player to adjust his TV’s brightness in Dead Space {Game Outcome => Player Senses}

Prosopamnesia {Player Intention => Player Senses} with minimal directedness.

Closure: only what is seen (illuminated) is solid in the game world {Player Plan => Player Senses => Game Action}

This step is about the player learning the operational and constitutive rules of the game, the properties and behaviors of its objects, the resulting patterns, how game subsystems work, interact and react to change, the resource flows, the story, etc. It’s the knowledge he uses to form goals and to make plans. It is very important to distinguish the acquisition and updating of this knowledge from its use: it’s the difference between training and competing in a championship.

This is also where the player learns the interaction protocols of the game and how to recognize in which state the game is – either at the communication or at the metacommunication levels.

Plans that are proven to work in specific circumstances become part of the model – they become chunked and no longer require a dynamic evaluation from the player – as do exploits, popular strategies and player rituals. So, the model is not only about how the game works, but also about how it is played.

This step covers all the cognitive aspects of Bloom's taxonomy of learning domains.

This range is so vast that it causes design issues. For instance, games often ask the player to access the evaluation behavior before being proficient in the preceding ones – like choosing which avatar skills to upgrade before understanding how these changes will affect his possibilities.

Games let the player learn by doing. A shortcut starting from the Player Model is about letting the player experiment and about increasing his ability to test conditions in the game through epistemic actions. To quote Paul Maglio and Michael Wenger: “Epistemic actions are physical actions people take more to simplify their internal problem-solving processes than to bring themselves closer to an external goal state. In the videogame Tetris, for instance, players routinely over-rotate falling shapes, presumably to make recognition or placement decisions faster or less error-prone.”

When a player is learning a game and its controls, it’s difficult to differentiate epistemic actions from experimentation and from goal-related actions. For instance, when playing a 3D platformer for the first time, moving toward a goal can serve all three purposes (testing the relation between stick incline and character speed, moving in a winding path to get a sense of the camera’s depth of field and behavior, and getting to a goal). Each of these processes can be improved separately, but careful consideration of how the three learning processes interact makes for a better overall learning experience.

Physics-based puzzles with a “programming” phase (The Incredible Machine, Crayon Physics, Armadillo Run, etc.) {Player Model => Player Plan} World of Goo is in a class of its own, adding real-time action to the process (medium speed).

The Memento-like structure of YouDunnit turns the player’s model into the playing field. {Player Model => Player Plan and Game Plan}

SpyParty (playing the spy) {Player Model => Player Action}

These shortcuts are about facilitating or impairing the player’s learning, as well as making him build a false model that ultimately improves his experience. Games with these shortcuts satisfy the explorer side of the player. This is the domain of revelations, twists and conceptual breakthroughs. It’s often applied to games’ stories but rarely to their mechanics. {Game Goal => Player Model}

Examples: Reversing the effect of a spell by playing its notes backward in Loom; having the last bullet in a clip do more damage in X-COM so as to generate more “heroic” last-ditch victories. {Game Plan => Player Model}

See also Brian Moriarty’s lecture on pareidolia in games.

SpyParty (playing the assassin) {Player Senses => Player Model}

Further ideas:

During this step, the player assesses whether he and the game pursue compatible goals – i.e. whether they belong to the same culture and whether they need to adjust to one another. It’s where the boundaries of Huizinga’s magic circle are tested (17).

The Player Outcome step is also where the metagame lies: here the player reflects on the playing experience and on goals beyond the current game events. When this occurs during play, the player can experience double-consciousness as described in Katie Salen & Eric Zimmerman’s Rules of Play (pp. 450–455): he can be immersed in the game while still being aware that he is interacting with it. So this step can also be used to communicate about the playing experience.